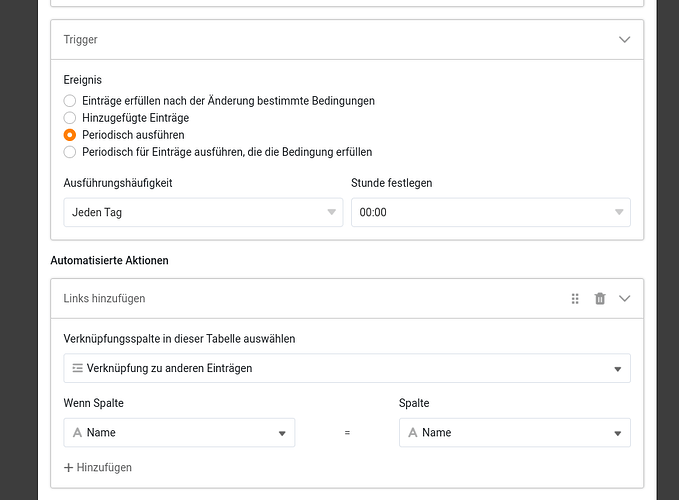

Hey, due to an issue with the automation of SeaTable, and because it doesn’t work automatically, we have to manually create (or update) a link between two tables every day.

To ensure this works even when our responsible employee is unavailable, I would like to write a Python script to handle this task.

Das habe ich auch erfolgriech geschaft:

def link_rows(self, table1_name, table2_name, link_column, key_column):

"""

Link rows between two tables based on a common key column.

"""

try:

link_id = self.get_link_id(table1_name, link_column)

except Exception as e:

raise Exception(f"Failed to get link ID: {e}")

try:

table1_data = self.get_table(table1_name)

table2_data = self.get_table(table2_name)

except Exception as e:

raise Exception(f"Failed to retrieve table data: {e}")

try:

table2_dict = {row[key_column]: row['_id'] for row in table2_data}

except KeyError as e:

raise Exception(f"Key column '{key_column}' not found in table '{table2_name}': {e}")

row_id_list = []

other_rows_ids_map = {}

for row in table1_data:

employer_id = row.get(key_column)

if employer_id in table2_dict:

row_id_list.append(row['_id'])

other_rows_ids_map[row['_id']] = [table2_dict[employer_id]]

try:

result = self.base.batch_update_links(link_id, table1_name, table2_name, row_id_list, other_rows_ids_map)

print(result)

print("Linking successful")

except Exception as e:

raise Exception(f"Failed to link rows: {e}")

Now, we are working with tables that have 40,000 or more entries, but using get_table and list_rows, I can only retrieve 1,000 entries at a time. At the same time, I don’t want to increase the number of requests since we have a daily limit.

I’m not sure if the same limitation applies to batch_update_links, but I assume it does.

Is there a solution for pulling and linking large datasets in Python or JavaScript?